Development/Projects/LAC Scalability testing

(→Limitations and Alternative Pilots: Fix caption) |

m (→Next Steps: Update link to AC dev page) |

||

| (2 intermediate revisions by the same user not shown) | |||

| Line 369: | Line 369: | ||

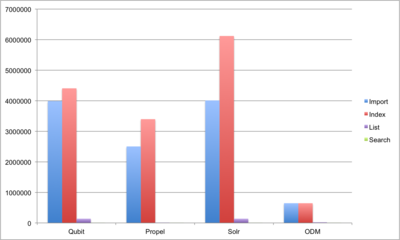

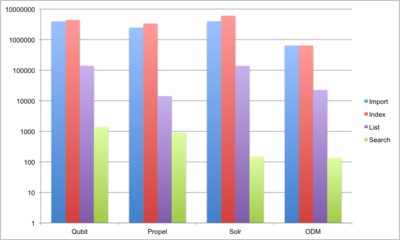

[[File:Import-index-t.png|400px|center|Import/index files: total time to process (seconds)']] | [[File:Import-index-t.png|400px|center|Import/index files: total time to process (seconds)']] | ||

<center>'''Import/index files: total time to process (seconds)'''</center> | <center>'''Import/index files: total time to process (seconds)'''</center> | ||

| + | <br /> | ||

| Line 399: | Line 400: | ||

==Next Steps== | ==Next Steps== | ||

| − | * See [[ArchivesCanada upgrade]] | + | * See [[Development/Projects/ArchivesCanada|ArchivesCanada upgrade]] |

Latest revision as of 11:09, 13 August 2015


Note

This is historical development documentation relating to scalability testing done for earlier versions of AtoM in 2011. It has been migrated here from a now-defunct wiki for reference. The content in this page was first created in January of 2011, and last updated June 14, 2011. This content was migrated to the AtoM wiki in July of 2015. Links have been updated where possible, and some presentation elements may have been modified to account for style differences between the two wikis. Otherwise, the content is the same.


Background

As the host of the Canadian Council of Archives (CCA) ArchivesCanada.ca database portal, Library and Archives Canada (LAC) has been collaborating with the CCA to evaluate a second-generation technical platform for the national catalogue of archival descriptions (see Report of the CCA/LAC Joint Working Group on the National Catalogue, 13 April 2007).

The primary candidate under consideration is the open source ICA-AtoM archival description application, which has been developed by the Vancouver firm Artefactual Systems under contract to the International Council on Archives and in collaboration with a number of archival institutions and associations, including LAC, CCA, and the Archives Association of British Columbia (see The ICA-AtoM / BCAUL Pilot Project: Report to Library Archives Canada/Canadian Council of Archives, 07 April 2009).

Following the success of the ICA-AtoM pilot in British Columbia, the archival associations of Saskatchewan, Manitoba and Ontario are now working to migrate their own provincial catalogues to the ICA-AtoM platform by late 2011.

Technical Questions

Some outstanding technical questions remain about the scalability of the ICA-AtoM platform to accommodate the full national catalogue of descriptions from all of Canada’s archival institutions (including LAC), which would amount to tens of millions of records (the AABC’s provincial catalogue currently contains fewer than 100,000 records). The primary issues that need clarification include:

- Bulk import of millions of records

- Search index build performance

- Object-relational mapping component performance

- Search request response time

A related issue is the visualization and navigation of large data sets, but this is considered out of scope for this investigation, as these usability scalability issues require different testing and analysis techniques from those related to performance scalability. It should also be noted that many of the known usability scalability issues have already been dealt with in the user interface of the ICA-AtoM release 1.0.9-beta (through caching, paging and AJAX features).

Specific Questions

- impact of XML parsing overhead and libraries (eg. SimpleXML, XSLT) used?

- import processing in single request: parallelize (eg. drop/watch folder) or serialize (multiple steps)?

- identify entities to test (eg. InfoObject); is a related entity (eg. subject/term) desirable or necessary?

- how to group/atomize multiple index calls in import/add?

- can bulk or partial index rebuilds be done asynchronously?

- identify required vs. unnecessary SQL queries in ORM (David has started this)

- research how to group/atomize multiple queries in ORM

- identify sample problematic/expensive queries in ORM (eg. JOIN events)

Scalability Testing Project

To provide more information about the scope of these scalability issues and the possible technical options for addressing them, an ICA-AtoM scalability testing project using approximately 3,000,000 MARC archival descriptions from LAC's MIKAN system was proposed in a June 2009 meeting with representatives from LAC, CCA and Artefactual Systems. Since that meeting LAC has made the technical and legal arrangements for the transfer of these records.

Project Schedule

Start: January 3, 2011

End: March 16, 2011

Project Benefits

The results of this project are of direct benefit to LAC and CCA as the organizations with the mandated responsibility for maintaining and improving access to Canada’s national archival catalogue. The scalability testing results will either:

- Identify a feasible technical path forward to use ICA-AtoM as the next generation ArchivesCanada.ca platform and take advantage of the momentum that is building around this application within the Canadian archival community.

- Rule out ICA-AtoM as a technical platform and allow the LAC and CCA to move on and investigate other technical candidates for the national catalogue.

Furthermore, the project offers immediate benefit to LAC as practical research and development that it can apply to the design and development of its own MIKAN system and related projects that are addressing online access to LAC’s catalogue of holdings, because it uses LAC’s own data to explore alternate data and index stores that directly address the question of scalability.

Testing Approach and Criteria

Approach & Assumptions

- see this page as starting point for proposed criteria for evaluation.

- test cases will be separated between: a) framework (eg. YAML/XML parsing), b) ORM read/write, and c) search index/query

- each test will stress each of the above components as independently as possible (difficult to separate Qubit framework from ORM)

- test cases are based on common use cases, eg. data migration, full reindex, querying, etc.

- testing does not include multi-language (i18n) records

- benchmarks are selected at 4 orders of magnitude (10^0, 10^4, 10^5, 10^6) to identify scalability profile (eg. linear, logarithmic, exponential):

  - 1-2 objects: baseline to identify framework overhead

  - 10K objects: largest presently known Qubit instance (MemoryBC)

  - 350K objects: synthetic data set of Dublin Core records

  - 3.5M objects: full data set from LAC MARC records

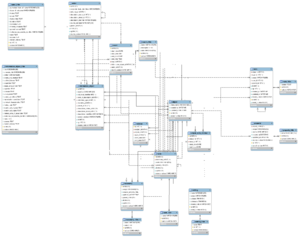

Data Model

Testing will be built around a "core" of the Qubit data model that focuses on the entities/relations that are of particular interest to data in the ArchivesCanada.ca portal (eg. information Object, term, actor, etc) and associated performance impacts, while removing aspects which are not critical (eg. user ACL, static pages) in order to simplify test setup.

Core data model for LAC testing

NB: Small image modified from full ERD, some relations not validated

Candidate Tool/Architectures

- Upgraded/re-integrated Propel ORM with Zend Search Lucene

- Migrate to Doctrine ORM

- Apache Solr for search index (NB: SolrCloud still under development)

- Mulgara RDF triple store used in Fedora

- NoSQL:

- May have to support JOIN relations, depending on Qubit domain model. We will have to port the data model to a document model.

- A MongoDB implementation would probably be fairly straightforward with Doctrine MongoDB ODM or sfMondongoPlugin, but would still require a separate indexing service

- Eliminate separate database and search index. Have single data store/index.

- ElasticSearch (NB: will require custom ODM, possibly ported from above)

- Solr has preliminary semi-join support, which may be moved to Lucene (and ES): SOLR-2272

Testing Plan

| Test Task | A: Baseline | B: Simplified ORM | C: Alternate ORM | D: Alternate Index | E: NoSQL ODM / Combined Index |
|---|---|---|---|---|---|
| | Qubit + ZSL | Propel + ZSL | Doctrine + ZSL | Qubit + Solr | ElasticSearch |
| 1. Import informationObjects (ORM/framework write) | Qubit ORM | Propel ORM | Doctrine ORM | 1A | ElasticSearch ODM |
| 2. Export informationObjects (ORM/framework read) | Qubit ORM | Propel ORM | Doctrine ORM | 2A | ElasticSearch ODM |
| 3. Index informationObjects (Index write) | ZSL Index | 3A | 3A | Solr Index | 1E |
| 4. Search Query (>50% hits) (Index read) | ZSL Index | 4A | 4A | Solr Index | ElasticSearch Index |
| YAML or SQL? | | | | | |

Preliminary Results

XML Import of Fondoque EAD 2002 XML (975KB) on my 2.0GHz C2D (time in seconds, lower is better):

| | 1A + 3A: Baseline | 1A + 3D: Alternate Index | 1E: NoSQL ODM / Combined index | 1A: Qubit ORM (no index) | 1B: Simplified ORM (no index) | 1F: NoSQL ODM (no index) |
|---|---|---|---|---|---|---|
| Execution runs | 312.63 / 352.88 / 383.78 | 212.31 / 203.08 / 217.51 | 49.23 / 44.08 / 42.74 / 41.83 | 88.29 / 80.81 / 88.12 | 28.45 / 23.71 / 24.51 / 26.96 | 12.54 / 12.82 / 13.04 / 11.89 |
| Average | 349.8 | 211 (-40%) 1.7x | 44.5 (-87%) 7.9x | 85.7 | 25.9 (-70%) 3.3x** | 12.6 (-85%) 6.8x |

Reindex and search on same data set, same machine:

| | 3A: ZSL (full reindex) | 3D: Solr (full reindex) | 4A: ZSL Search (63% hits) | 4D: Solr Search (63% hits) |
|---|---|---|---|---|
| Execution runs | 1435.06 / 1448.93 | 112.9 / 114.67 / 115.41 / 114.53 | 2.78 / 2.81 / 2.84 / 2.77 | 0.15 / 0.07 / 0.07 / 0.09 |
| Average | 1442 | 114.4 (-92%) 13x | 2.8 (2 terms)* | 0.1 (-97%) 28x |

NB: These numbers are based on code-in-progress and may well change significantly prior to the final benchmark plan.

*ZSL search response time increases approximately 1s for each additional query term

**most recent runs for Propel15 on this file are ~185s; need to investigate

XML Import of Braid Fonds XML (287KB) on same hardware (time in seconds, lower is better):

| | 1A: Qubit ORM (no index) | 1B: Simplified ORM (no index) | 1E: NoSQL ODM / Combined index | 1F: NoSQL ODM (no index) |
|---|---|---|---|---|
| Execution runs | 36.5 / 35.7 | 10.81 / 11.58 / 13.07 / 11.31 | 6.99 / 7.53 / 7.87 / 7.97 | 3.34 / 2.76 / 2.81 / 2.85 |
| Average | 36.1 | 11.7 (-68%) 3.1x | 7.6 (-79%) 4.8x | 2.9 (-92%) 12.4x |
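The "Average" row in these tables can be recomputed from the execution runs. The following is a minimal Python sketch, not part of the original test scripts, that takes the Braid Fonds run times from the table above and derives the average, the percentage change relative to the 1A baseline, and the speedup factor. The function name `summarize` is an illustrative assumption.

```python
from statistics import mean

# Run times in seconds, copied from the Braid Fonds table above.
runs = {
    "1A: Qubit ORM (no index)": [36.5, 35.7],
    "1B: Simplified ORM (no index)": [10.81, 11.58, 13.07, 11.31],
    "1E: NoSQL ODM / Combined index": [6.99, 7.53, 7.87, 7.97],
    "1F: NoSQL ODM (no index)": [3.34, 2.76, 2.81, 2.85],
}


def summarize(runs, baseline):
    """Return {config: (average, percent change vs baseline, speedup)}."""
    base = mean(runs[baseline])
    summary = {}
    for name, times in runs.items():
        avg = mean(times)
        change = (avg - base) / base * 100  # negative means faster than baseline
        speedup = base / avg                # how many times faster than baseline
        summary[name] = (avg, change, speedup)
    return summary


summary = summarize(runs, "1A: Qubit ORM (no index)")
for name, (avg, change, speedup) in summary.items():
    print(f"{name}: {avg:.1f}s ({change:+.0f}%) {speedup:.1f}x")
```

The table appears to derive its speedup factors from already-rounded averages, so the last digit can differ slightly from what this sketch prints (for example for 1F).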

Early NoSQL Prototype Background

ElasticSearch (ES) is like a cross between Solr and MongoDB, in many ways being the best of both worlds. I actually started originally with MongoDB because I wanted a platform that could — theoretically at least — scale to over a billion records (the same order as WorldCat). The original test set was 2.4 million MARC records.

As far as architecture goes, MongoDB and ES are hugely different at the core: MongoDB is written in C++ and uses MapReduce for querying; ES is written in Java and uses Lucene Query Syntax for querying (ES is built on top of Lucene). They are both highly RESTful (certainly much more so than Solr), and they both use master-slave replication and sharding to accomplish their scaling. I never got to test multi-node scaling with MongoDB, but with ES it is as easy as starting another instance on a machine on the same network — they auto-discover, auto-replicate, auto-failover, etc.

Unfortunately, MongoDB is a *database*, not a search index, which means that keyword searching within fields is both very limited and relatively slow. This combined with the fact that it has no support for fuzzy search, faceting, stemming, histograms, etc. means it would be necessary to write that functionality from scratch in PHP. Considering complex searching is a primary use case, MongoDB is basically a non-starter.

At the same time, some people have been using ES as a parallel index with MongoDB in much the same way we're using Zend Search Lucene (ZSL) in parallel with MySQL. Write operations in the ORM are committed to both the index and the DB, the index is used solely for keyword-type searching, and the DB is used for the usual relational-type stuff (but mostly for speed).
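The dual-write arrangement described above can be sketched in a few lines of Python. This is a toy illustration only: the class `DualWriteStore` and its in-memory dictionaries are hypothetical stand-ins for the database and the search index, and do not reflect the Qubit ORM, ZSL, MongoDB or ES APIs.

```python
from collections import defaultdict


class DualWriteStore:
    """Toy stand-in for a database plus a parallel keyword index."""

    def __init__(self):
        self.db = {}                    # id -> full record (the "database")
        self.index = defaultdict(set)   # term -> ids (the "search index")

    def save(self, record_id, record):
        # On update, drop the old record's terms so the index stays in sync.
        if record_id in self.db:
            for term in self._terms(self.db[record_id]):
                self.index[term].discard(record_id)
        # Every write is committed to both stores.
        self.db[record_id] = record
        for term in self._terms(record):
            self.index[term].add(record_id)

    def search(self, term):
        # Keyword lookups hit the index only; records are read from the DB.
        ids = self.index.get(term.lower(), set())
        return [self.db[i] for i in sorted(ids)]

    @staticmethod
    def _terms(record):
        return {w.lower() for value in record.values() for w in str(value).split()}


store = DualWriteStore()
store.save(1, {"title": "Braid fonds"})
store.save(2, {"title": "Council series"})
print(store.search("fonds"))
```

Note that every record is held twice, once in `db` and once (as terms) in `index`, which is the duplication discussed in the next paragraph.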

This seems like a massive duplication of data (two copies *in memory*), but it also reveals that, if an application isn't doing a lot of necessary JOINs on a regular basis, relations can be managed entirely in the model layer. Since NoSQL data stores have (arguably) no capacity for JOIN logic, relations have to be managed this way anyhow.

My early conclusion was that a single in-memory, keyword-searchable index combined with an ODM to manage relations ought to be sufficient for applications in which searching is a primary use case (It is likely that Qubit falls into this definition).

The other thing I realized was that search response time is *far* more important than DB access time, and that an in-memory DB wasn't strictly necessary as long as disk reads were reasonably quick. I switched from MySQL to SQLite, and then "cheated" a bit by putting the DB on a cheap-but-fast SSD. Access times were actually faster, and I had freed up all my RAM for the ES index. Even though the entire index (3.9GB) didn't fit into memory (2GB), cache misses were still about 500% faster with the SSD.

The big question that remains is whether ES is sufficient for a single data store — Shay Banon, the author, has been notoriously coy when answering questions around this, as well as comparisons with Solr:

"As to the question if elasticsearch can basically act as the single nosql solution of choice, namely the main storage of your data, it depends. First note, that elasticsearch is not a 1.0 version (I consider it a strong beta, some sites are about to go live with it any day now), so, I would consider not using it as the main data storage currently. This is for the simple reason that if something goes really bad, you can always reindex the data. I have worked and been involved with several projects that actually used Lucene as the main storage system of applications, and they were happy with it. Will elasticsearch become a possible main data storage? Depends. If what it provides fits the bill, and it goes GA, then go with it. If not (you need versioning, transactionality), then it can certainly be a complimentary solution to your nosql of choice." ([1])

As ES has matured, I think it is more likely to be suitable as a single data store, with the master ES node on the same machine (virtual or physical) as the application; but it would almost certainly require significant re-tooling in the ORM to accomplish this.

Test environments

B: Simplified ORM

The simplified ORM implements a clean install of the symfony 1.4 framework. The required testing components (Information Object list and read, EAD import and ZSL indexing/search) from the Qubit Toolkit have been migrated to the clean install, with as little modification as possible. To allow concrete table inheritance, which was made available in Propel 1.5, the sfPropel15Plugin was installed.

Note that concrete table inheritance does not allow a 100% reproduction of the Qubit data structure, but the difference should have minimal impact on the performance of the system as a whole.

Difficulties loading fixtures (pre-populated system data):

- Can't define self-references to an inherited column (e.g. Can't create a FK from information_object.parent_id to information_object.id, it must link to qubit_object.id)

- The fixtures (YAML) file requires all foreign key values to be a valid YAML key value, and therefore will not accept a string or integer value (see modifications to data/fixtures)

- This means that we can not, for instance, define an information object fixture with a pre-defined id as the information_object.id column is a foreign key to qubit_object.id

- i18n column values can not be represented with a YAML associative array (e.g. { en: test, fr: teste, es: prueba }). Trying to do so results in the string "Array" being imported into the database.

- The "source_culture" column for i18n joins is not present in the default symfony i18n tables. Must be a Qubit customization?

C: Alternate ORM

This case uses Doctrine 2.0 instead of the default Doctrine 1.2 included in Symfony 1.4. sfDoctrine2Plugin is not finished yet, so we integrated Doctrine without using the Symfony plugin system.

Doctrine 2 does not yet include some behaviours found in 1.2, like I18N or NestedSet (also in Propel 1.5), but we can make use of the Translatable and Tree extensions from Doctrine2 Behavioral Extensions.

D: Alternate Index

The alternate index implements an updated version of the sfSolrPlugin using the latest version (r22) of the Solr PHP client and a clean install of the Solr 1.4.1 release. The Qubit components implementing search functions have been modified to use the sfSolrPlugin directly, and sfLucenePlugin (ZSL) and sfSearchPlugin have been removed from the codebase.

Solr has been configured to dynamically index all fields using the default TextField analyzer provided with the example configuration (schema.xml). All fields are copied to a single Solr field (to allow multi-field searching behaviour like ZSL), which is indexed but not stored.

Because sfSolrPlugin does not properly initialize on Symfony 1.4, it does not provide a "symfony lucene-rebuild" task as per the documentation. We will either have to write a custom symfony task or find an alternative way of doing a full index rebuild using Solr that compares reasonably with the ZSL "symfony search:populate" task.

E: Combined NoSQL ODM/Index

The combined ODM/index implements the Mondongo ODM using the sfMondongoPlugin for Symfony 1.4. It uses a custom-written library (dubbed 'Elongator') to integrate ElasticSearch 0.15 in place of MongoDB as the data store. Because ElasticSearch is built on Lucene, it also serves as the keyword search index, ie. in lieu of ZSL or Solr.

ElasticSearch is used as-provided without any configuration; it simply runs on the same machine as the Qubit-ODM installation. Current limitations of the ODM include:

- Can't define self-references to a document of the same class (ie. direct parent-child relationship); this is fixed in the beta2 release but not implemented in time

- i18n is not implemented, but due to the ODM design the performance impact is negligible either way, so results won't be affected perceptibly

- Due to porting SQL-based design concepts (e.g. root objects, relations table, Term constants), some inefficiency is built into the schema used

Final Test Configuration

Performance tests are accomplished by a script using the Python Mechanize library to import a set of EAD XML files from a folder into each of the specified configurations. Importing is accomplished using a Symfony batch XML-import task, which provides the following benefits:

- records timing results and continues past import error (eg. segmentation faults in indexing)

- avoids performance skew due to web server/HTTP overhead, etc.

- records memory used during each test
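The three benefits listed above (per-import timing, continuing past errors, and memory recording) can be illustrated with a small Python harness. This is a sketch under stated assumptions, not the project's actual script or the Symfony task: `run_batch` and `fake_import` are hypothetical names, the importer is an ordinary Python callable, and `tracemalloc` only measures Python allocations. A real segmentation fault in an external import process would have to be detected differently, for example through the exit status of a subprocess.

```python
import time
import tracemalloc


def run_batch(items, import_one):
    """Time each import, record peak memory, and keep going after failures."""
    results = []
    for item in items:
        tracemalloc.start()
        started = time.perf_counter()
        error = None
        try:
            import_one(item)
        except Exception as exc:  # record the failure and continue the batch
            error = repr(exc)
        elapsed = time.perf_counter() - started
        _, peak = tracemalloc.get_traced_memory()
        tracemalloc.stop()
        results.append(
            {"item": item, "seconds": elapsed, "peak_bytes": peak, "error": error}
        )
    return results


def fake_import(name):
    """Hypothetical stand-in for the real import task."""
    if name == "bad.xml":
        raise ValueError("malformed EAD")
    return [0] * 1000


results = run_batch(["a.xml", "bad.xml", "b.xml"], fake_import)
for row in results:
    print(row["item"], f"{row['seconds']:.6f}s", row["peak_bytes"], row["error"])
```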

Configuration C, Doctrine2 ORM will be omitted due to time constraints. Final testing configurations and requirements are:

A: Qubit + ZSL

- modified to ignore/skip ACL checks; this is to compare more closely with other configs and remove complexity from the Mechanize client

- requires: MySQL db for qubit-trunk

B: Propel + ZSL

- requires: MySQL db for qubit-propel

D: Qubit + Solr

- modified to ignore/skip ACL checks; this is to compare more closely with other configs and remove complexity from the Mechanize client

- requires: MySQL db for qubit-solr, Java JVM for Solr (servlet container is included)

E: Mondongo + ElasticSearch

- requires: Java JVM for ElasticSearch

Final Test Series

- Import EAD files with indexing DISABLED (ORM write)

  - test will be run on 4 sets of EAD XML files converted from MARC: a) 1, b) 10K, c) 350K, d) 3.5M

  - tests will be repeated to normalize results as follows: a) 5x, b) 3x, c) 2x, d) 1x

- List Information Objects (ORM read)

  - test will be run after each import

  - tests will be re-run 5 times to normalize results

- Import EAD files with indexing ENABLED (index write)

  - test will be run on 4 sets of EAD XML files converted from MARC: a) 1, b) 10K, c) 350K, d) 3.5M

  - tests will be repeated to normalize results as follows: a) 5x, b) 3x, c) 2x, d) 1x

- Search query with high hit-rate (index read)

  - test will be run with a set of common terms: eg. "fonds", "series", "canada", "finding aid"

  - tests will be re-run 5 times to normalize results
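To get a feel for the size of this test series, the import workload can be tallied with a few lines of Python. The sketch below uses the data set sizes and repetition counts listed above, and assumes (this is not stated in the plan) that each of the four remaining configurations runs both import series in full.

```python
# Data set size -> number of repetitions, as listed in the test series.
REPEATS = {1: 5, 10_000: 3, 350_000: 2, 3_500_000: 1}

CONFIGS = ["A", "B", "D", "E"]                         # configuration C is omitted
MODES = ["indexing disabled", "indexing enabled"]      # the two import series

# Import runs and records processed for one configuration in one mode.
runs_per_series = sum(REPEATS.values())
records_per_series = sum(size * count for size, count in REPEATS.items())

# Totals across all configurations and both import series (assumption: all run in full).
total_runs = runs_per_series * len(CONFIGS) * len(MODES)
total_records = records_per_series * len(CONFIGS) * len(MODES)

print(f"{runs_per_series} import runs and {records_per_series:,} records per series")
print(f"{total_runs} import runs and {total_records:,} records in total")
```

Almost all of the workload comes from the two largest data sets, which is why the per-record import time dominates any estimate of the total testing time.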

Limitations and Alternative Pilots

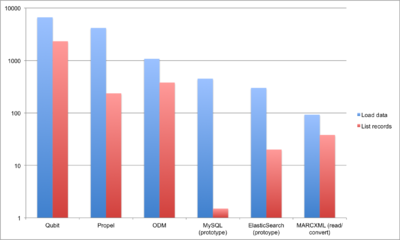

First attempts at 3,500 record data sets showed performance to be significantly worse on a large number of small files, i.e. 100 4KB EAD files with a single level of description took much longer to process than a single 400KB EAD file with a hundred levels of description. Estimates of the time required to complete testing ranged from a few weeks to several months. As a result, two pilot applications were developed that contained as little software as possible, but rather focused on the core goal of storing and loosely manipulating a simple representation of all 3.5 million records. The first configuration was a simplified MySQL-based data store with basic indexing enabled. The second configuration was an ElasticSearch instance with a small indexed JSON representation of each record. The two prototype configurations were designed to show the impact of the architectural difference between a SQL-based data store and a Lucene-based NoSQL one.
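One plausible reading of the small-file result is that each file carries a fixed processing cost on top of the per-record cost. The source does not state the cause, so the following Python sketch is only a simple cost model under that assumption, and the cost values are hypothetical numbers chosen to illustrate the effect rather than measurements.

```python
def total_time(n_files, n_records, per_file_overhead, per_record_cost):
    """Simple cost model: a fixed cost per file plus a cost per record."""
    return n_files * per_file_overhead + n_records * per_record_cost


# Hypothetical costs in seconds, NOT measured values.
OVERHEAD = 0.5
PER_RECORD = 0.05

many_small = total_time(100, 100, OVERHEAD, PER_RECORD)  # 100 files, 1 level each
one_large = total_time(1, 100, OVERHEAD, PER_RECORD)     # 1 file, 100 levels

print(f"100 small files: {many_small:.1f}s, one large file: {one_large:.1f}s")
```

Under this model the same 100 descriptions cost far more when split across 100 files, because the fixed per-file cost is paid 100 times instead of once.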

Scalability Profiles

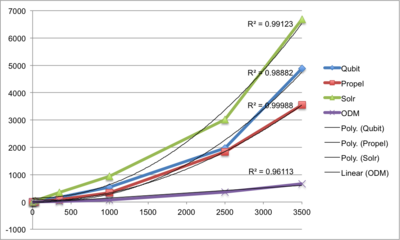

The Propel ORM scales better than the Qubit ORM by about 50% when writing, but does so at the cost of far greater memory consumption. Both ORMs, however, exhibit polynomial (i.e. square-power) decreases in performance across the data set. The ODM, by contrast, scales very close to linearly while remaining at least 300% faster than the others. Writing to the search index does not significantly change the performance characteristics of the ORM, showing a decrease in speed of 10% to 30%. Solr performance drops by almost 50% when records are loaded into the index as compared to the database alone. Because the ElasticSearch ODM is an integrated data store and index, it continues to scale at the same rate for both importing and indexing, since they are the same write action.
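A common way to tell linear from polynomial scaling is to fit a straight line to the timings on a log-log scale: the slope approximates the exponent k in time ≈ c · n^k, so a slope near 1 indicates linear scaling and a slope near 2 indicates quadratic scaling. The Python sketch below shows this technique on synthetic data; the timings are generated for illustration and are not results from this project, and it is not claimed that the original analysis was done this way.

```python
import math


def scaling_exponent(sizes, times):
    """Least-squares slope of log(time) against log(size)."""
    xs = [math.log(n) for n in sizes]
    ys = [math.log(t) for t in times]
    x_bar = sum(xs) / len(xs)
    y_bar = sum(ys) / len(ys)
    num = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    den = sum((x - x_bar) ** 2 for x in xs)
    return num / den


# Synthetic timings, NOT measurements from this project.
sizes = [10, 100, 1000, 3500]
linear_times = [0.02 * n for n in sizes]
quadratic_times = [0.00001 * n ** 2 for n in sizes]

linear_k = scaling_exponent(sizes, linear_times)
quadratic_k = scaling_exponent(sizes, quadratic_times)
print(f"linear profile exponent: {linear_k:.2f}")
print(f"quadratic profile exponent: {quadratic_k:.2f}")
```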

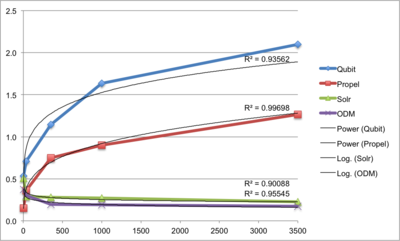

There is a major difference between the configurations using the ZSL search index (Qubit and Propel), and those using a dedicated Lucene-based index (Solr and ODM/ElasticSearch). The former reveal a very clear negative-power performance profile, while the latter show a decreasing logarithmic response curve. This result means that the ZSL index will scale poorly (or at best, linearly) for very large data sets, and response time will always increase with index size. Solr and ElasticSearch, on the other hand, will both settle upon a near-constant response time, regardless of index size.

Analysis & Findings

The current architecture is nearing the limits of acceptable response time for searching and reading from the ORM, but is still acceptable for importing a set of 3500 records. With the exception of improved search query performance, neither Propel nor Solr offers a substantial improvement. Based on linear projections of the tested performance profiles, we can estimate performance on a data set of 35,000 records. This projection fits quite closely with anecdotal data from the recent UNBC data migration. For collections of this size, the software still performs well on fast hardware, but the software is taxed. At 100,000 records, import and indexing speed remains acceptable.

Projections for 3.5 million records show the substantial difference in scalability limits when dealing with very large amounts of data. The relative differences between the configurations become greatly diminished as the quantity of data increases exponentially. ODM performance remains superior, but is still within approximately one order of magnitude for write operations and ORM/ODM reading. Memory use at 3.5 million records is within reasonable limits for a modern production system, with two notable exceptions. The fact that the Qubit ORM uses almost 300% more memory than Propel when reading indicates that there is significant room for improving its efficiency.
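The kind of projection used above can be expressed as a one-line scaling rule: a time measured at n0 records is scaled by (n / n0)^k, where k = 1 gives a linear projection and k = 2 a quadratic one. The Python sketch below is illustrative only; the function name `project` and the 100-second starting value are hypothetical and are not figures from the tests.

```python
def project(time_at_n0, n0, n, exponent=1.0):
    """Project a measured time from n0 records to n records.

    exponent=1.0 is a linear projection; exponent=2.0 assumes quadratic growth.
    """
    return time_at_n0 * (n / n0) ** exponent


# Hypothetical measurement: 100 seconds for 3,500 records (NOT a project result).
measured_seconds, measured_size = 100.0, 3_500

linear_35k = project(measured_seconds, measured_size, 35_000)
quadratic_35k = project(measured_seconds, measured_size, 35_000, exponent=2.0)
linear_3_5m = project(measured_seconds, measured_size, 3_500_000)

print(f"35,000 records: {linear_35k:.0f}s linear, {quadratic_35k:.0f}s quadratic")
print(f"3,500,000 records: {linear_3_5m:.0f}s linear")
```

The gap between the linear and quadratic projections grows with each order of magnitude, which is why the choice of scaling profile matters far more at 3.5 million records than at 35,000.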

Based on the analysis above, it seems plausible that increases in performance and scalability for large archives in the range of 100,000 records are achievable through two major improvements:

1) targeting acute performance issues in the Qubit ORM relating to read performance and memory use when indexing. Conservatively assuming that roughly a 30% to 60% increase in read performance and a 50% decrease in memory use are possible, this would allow the application to scale at least one more order of magnitude, i.e. into hundreds of thousands of archival descriptions.

2) replacing the ZSL-based indexing system with the Lucene-based Solr or ElasticSearch, allowing search to scale horizontally (indefinitely) to support very large and growing enterprise deployments.

UPDATE: 1) is scheduled for release 1.2 in October 2012. 2) (using ElasticSearch) is scheduled for release 1.3 in April 2012