Difference between revisions of "Development/Projects/AABC Data Migration Toolkit/UNBC"

m (Dan moved page UNBC data migration to Development/Projects/AABC Data Migration Toolkit/UNBC: Maintain wiki hierarchy) |

(Fix spacing and links, add navigation footer) |

||

| Line 1: | Line 1: | ||

| − | {{#pagetitle:UNBC data migration}} | + | {{#pagetitle:AABC Toolkit: UNBC data migration}} |

| − | [[Main Page]] > [[Development]] > [[Development/Projects]] > [[Development/Projects/AABC Data Migration Toolkit]] > UNBC | + | [[Main Page]] > [[Development]] > [[Development/Projects]] > [[Development/Projects/AABC Data Migration Toolkit]] > UNBC |

| Line 12: | Line 12: | ||

Due to the pilot nature of the project, there were a number of unforeseen technical challenges and the project ran over schedule. | Due to the pilot nature of the project, there were a number of unforeseen technical challenges and the project ran over schedule. | ||

| + | |||

== Original sources for migration == | == Original sources for migration == | ||

| Line 29: | Line 30: | ||

Data from one (1) ICA-AtoM Release 1.0.9 instance (set up in late 2010?), including description and digital assets | Data from one (1) ICA-AtoM Release 1.0.9 instance (set up in late 2010?), including description and digital assets | ||

# http://www.ica-atom.org/unbc | # http://www.ica-atom.org/unbc | ||

| + | |||

== Data migrated == | == Data migrated == | ||

| Line 36: | Line 38: | ||

* The digital files from the ''Uploads.zip'' archive | * The digital files from the ''Uploads.zip'' archive | ||

* The ICA-AtoM Release 1.0.9 UNBC website (hosted by Artefactual) including digital assets | * The ICA-AtoM Release 1.0.9 UNBC website (hosted by Artefactual) including digital assets | ||

| + | |||

== Data not migrated == | == Data not migrated == | ||

| Line 45: | Line 48: | ||

* in the course of the project it was determined that the "Ray Williston Collection" data was present in the NBCA (MS SQL Server) database, so migration of the ''Ray Williston Collection.mdb'' database was not necessary | * in the course of the project it was determined that the "Ray Williston Collection" data was present in the NBCA (MS SQL Server) database, so migration of the ''Ray Williston Collection.mdb'' database was not necessary | ||

* The ''Fawcett.mdb'' database will be migrated after development of a [[AABC_Data_Migration_Toolkit#CSV Import]] methodology | * The ''Fawcett.mdb'' database will be migrated after development of a [[AABC_Data_Migration_Toolkit#CSV Import]] methodology | ||

| + | |||

=== Accession data === | === Accession data === | ||

| − | [ | + | [[Releases/Release announcements/Release 1.1|ICA-AtoM Release 1.1]] has no provision for storing accession data. The future [[Releases/Release announcements/Release 1.2|ICA-AtoM Release 1.2]] '''will''' include an accession module, and this data will be migrated at a future date. |

Accession data is stored in several tables in the NBCA database, and these were not migrated: | Accession data is stored in several tables in the NBCA database, and these were not migrated: | ||

| Line 55: | Line 59: | ||

# ''AppraiserSpecialty'' - links appraisers (Individual) to their area of specialty | # ''AppraiserSpecialty'' - links appraisers (Individual) to their area of specialty | ||

# ''Receipt'' - accession receipts? | # ''Receipt'' - accession receipts? | ||

| + | |||

=== Other MS SQL Server tables not migrated === | === Other MS SQL Server tables not migrated === | ||

| Line 60: | Line 65: | ||

# ''SpecifiedLocation'' - locations are specified at the Fonds level, and are very general (e.g. "Photograph room", "Mobile shelving", "On display in library"). UNBC agreed that migrating this data was not necessary. | # ''SpecifiedLocation'' - locations are specified at the Fonds level, and are very general (e.g. "Photograph room", "Mobile shelving", "On display in library"). UNBC agreed that migrating this data was not necessary. | ||

# ''Lookup'' - contained controlled vocabulary maintained in the ''Lookups'' table was not applicable in the ICA-AtoM application. | # ''Lookup'' - contained controlled vocabulary maintained in the ''Lookups'' table was not applicable in the ICA-AtoM application. | ||

| + | |||

== Project deliverables == | == Project deliverables == | ||

| Line 66: | Line 72: | ||

# Integration of linked digital assets | # Integration of linked digital assets | ||

# This process documentation | # This process documentation | ||

| + | |||

= Methodology = | = Methodology = | ||

| Line 78: | Line 85: | ||

# Correct any problems with the migration script found in the data audit | # Correct any problems with the migration script found in the data audit | ||

# Do the final migration of the data to the live UNBC site (hosted by Artefactual) | # Do the final migration of the data to the live UNBC site (hosted by Artefactual) | ||

| + | |||

= Extract = | = Extract = | ||

| Line 97: | Line 105: | ||

* Click checkbox next to backup filename | * Click checkbox next to backup filename | ||

* OK | * OK | ||

| + | |||

== Setup MS SQL Server for remote connections == | == Setup MS SQL Server for remote connections == | ||

| Line 112: | Line 121: | ||

* Default database "NBCA" | * Default database "NBCA" | ||

* OK | * OK | ||

| + | |||

=== Give read access to new login === | === Give read access to new login === | ||

| Line 136: | Line 146: | ||

* Click "SQL Server 2005 Services" in left pane | * Click "SQL Server 2005 Services" in left pane | ||

* In right pane, right-click "SQL Server (SQLEXPRESS)" and click "Restart" | * In right pane, right-click "SQL Server (SQLEXPRESS)" and click "Restart" | ||

| + | |||

=== Troubleshooting TCP/IP config === | === Troubleshooting TCP/IP config === | ||

| Line 142: | Line 153: | ||

* HKEY_LOCAL_MACHINE\SOFTWARE\Microsoft\Microsoft SQL Server\<InstanceName>\MSSQLServer\SuperSocketNetLib\Tcp | * HKEY_LOCAL_MACHINE\SOFTWARE\Microsoft\Microsoft SQL Server\<InstanceName>\MSSQLServer\SuperSocketNetLib\Tcp | ||

* HKEY_LOCAL_MACHINE\SOFTWARE\Microsoft\Microsoft SQL Server\<MSSQL.x>\MSSQLServer\SuperSocketNetLib\Tcp\IPAll | * HKEY_LOCAL_MACHINE\SOFTWARE\Microsoft\Microsoft SQL Server\<MSSQL.x>\MSSQLServer\SuperSocketNetLib\Tcp\IPAll | ||

| + | |||

== Connecting to MSSQL with PDI Spoon via JDBC == | == Connecting to MSSQL with PDI Spoon via JDBC == | ||

| Line 156: | Line 168: | ||

* Click OK | * Click OK | ||

| − | < | + | <admonition type="note> |

| + | '''NOTE:''' The "MS SQL Server (Native)" connector did not work in our tests, so the vanilla "MS SQL Server" connector was used | ||

| + | </admonition> | ||

<br style="clear:both" /> | <br style="clear:both" /> | ||

| + | |||

= Transform = | = Transform = | ||

| Line 484: | Line 499: | ||

== ICA-AtoM Release 1.0.9 data == | == ICA-AtoM Release 1.0.9 data == | ||

| − | Data from the existing http://ica-atom.org/unbc database was migrated to ICA-AtoM Release 1.1 data schema via the standard ICA-AtoM | + | Data from the existing http://ica-atom.org/unbc database was migrated to ICA-AtoM Release 1.1 data schema via the standard ICA-AtoM upgrade process. |

| + | |||

== Other transform sources == | == Other transform sources == | ||

| Line 491: | Line 507: | ||

[[File:Unbc-access-point-relations-ktr.png|thumb|'''AccessPointRelation.ktr''' '''PDI Spoon''' transform script creating description->actor (name) and description->term (subject, place) relations from access points]] | [[File:Unbc-access-point-relations-ktr.png|thumb|'''AccessPointRelation.ktr''' '''PDI Spoon''' transform script creating description->actor (name) and description->term (subject, place) relations from access points]] | ||

| + | |||

=== Creator name === | === Creator name === | ||

| Line 501: | Line 518: | ||

In the NBCA ''Fond'' and ''FondSeries'' source tables, the ''Keywords'' column contains a semi-colon separated list of strings. This data is defined via "Access Points" in the ICA-AtoM model. Because ICA-AtoM distinguishes between name, subject and place access points, and there is no automated way to categorize all of the original strings, this required a multi-part process: | In the NBCA ''Fond'' and ''FondSeries'' source tables, the ''Keywords'' column contains a semi-colon separated list of strings. This data is defined via "Access Points" in the ICA-AtoM model. Because ICA-AtoM distinguishes between name, subject and place access points, and there is no automated way to categorize all of the original strings, this required a multi-part process: | ||

| + | |||

# Export a comma-separated file containing all ''Keywords'' column data from the NBCA database (only present in ''Fond'' and ''FondSeries'' tables) | # Export a comma-separated file containing all ''Keywords'' column data from the NBCA database (only present in ''Fond'' and ''FondSeries'' tables) | ||

# Run automated normalization and data-cleaning using [http://code.google.com/p/google-refine/ Google Refine] (Artefactual) | # Run automated normalization and data-cleaning using [http://code.google.com/p/google-refine/ Google Refine] (Artefactual) | ||

# Manual data cleaning via '''Google Refine''' (UNBC) | # Manual data cleaning via '''Google Refine''' (UNBC) | ||

# Import of cleaned and normalized CSV file via '''PDI Spoon''' | # Import of cleaned and normalized CSV file via '''PDI Spoon''' | ||

| + | |||

The complex data structure of ICA-AtoM further complicated the import process (step #4) as can been seen in the '''AccessPointEntity.ktr''' and '''AccessPointRelation.ktr''' screenshots. | The complex data structure of ICA-AtoM further complicated the import process (step #4) as can been seen in the '''AccessPointEntity.ktr''' and '''AccessPointRelation.ktr''' screenshots. | ||

| + | |||

=== Digital asset import === | === Digital asset import === | ||

| − | Digital assets locations were exported from the NBCA MS SQL Server database to a CSV file via '''PDI Spoon''' transformation script then the actual digital assets were imported and linked to a description via the [[AABC_Data_Migration_Toolkit#Digital_object_load_task|digitalobject:load]] task. The transformation script also mapped the source id (''FondSeriesItemAttachement.FondSeriesItemId'') to the target key (''information_object.id'') via the ''keymap'' table, and did a string search & replace on the ''Attachment.SourcePath'' column to substitute the local path for each digital asset. | + | Digital assets locations were exported from the NBCA MS SQL Server database to a CSV file via '''PDI Spoon''' transformation script then the actual digital assets were imported and linked to a description via the [[Development/Projects/AABC_Data_Migration_Toolkit#Digital_object_load_task|digitalobject:load]] task. The transformation script also mapped the source id (''FondSeriesItemAttachement.FondSeriesItemId'') to the target key (''information_object.id'') via the ''keymap'' table, and did a string search & replace on the ''Attachment.SourcePath'' column to substitute the local path for each digital asset. |

<br style="clear:both"/> | <br style="clear:both"/> | ||

| Line 519: | Line 539: | ||

Data from the http://ica-atom.org/unbc site can be loaded via [http://dev.mysql.com/doc/refman/5.1/en/mysqldump.html mysqldump] backup file. This step must be performed before any other migrations because it destroys any existing data in the target database. | Data from the http://ica-atom.org/unbc site can be loaded via [http://dev.mysql.com/doc/refman/5.1/en/mysqldump.html mysqldump] backup file. This step must be performed before any other migrations because it destroys any existing data in the target database. | ||

| + | |||

== Load NBCA data == | == Load NBCA data == | ||

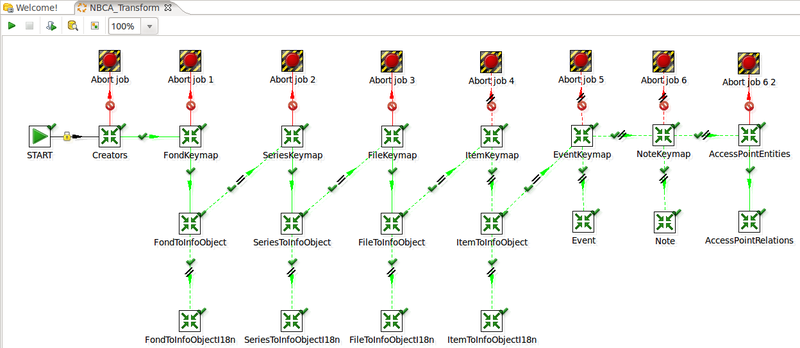

The '''PDI Spoon''' ''NBCA_Transform.kjb'' job writes data directly to the target ICA-AtoM MySQL database. | The '''PDI Spoon''' ''NBCA_Transform.kjb'' job writes data directly to the target ICA-AtoM MySQL database. | ||

| + | |||

== Load digital objects == | == Load digital objects == | ||

Digital objects are loaded using the [[AABC_Data_Migration_Toolkit#Digital_object_load_task|digitalobject:load]] task using the [[#Digital assets|nbca_digitalobjects.csv]] file to link assets to archival descriptions. | Digital objects are loaded using the [[AABC_Data_Migration_Toolkit#Digital_object_load_task|digitalobject:load]] task using the [[#Digital assets|nbca_digitalobjects.csv]] file to link assets to archival descriptions. | ||

| + | |||

= Post-processing = | = Post-processing = | ||

| Line 532: | Line 555: | ||

Several final tasks must be performed on the final ICA-AtoM instance data which require direct access to the ICA-AtoM/symfony framework | Several final tasks must be performed on the final ICA-AtoM instance data which require direct access to the ICA-AtoM/symfony framework | ||

| − | # Build nested set - See: [[ | + | # Build nested set - See: [[Development/Projects/AABC_Data_Migration_Toolkit#Build nested set task|Build nested set task]] |

| − | # Generate permalink slugs - See: [[ | + | # Generate permalink slugs - See: [[Development/Projects/AABC_Data_Migration_Toolkit#Generate slugs task|Generate slugs task]] |

| − | # Build search index - | + | # Build search index |

| + | |||

| + | |||

| + | |||

| + | ----- | ||

| + | |||

| + | * [[Development/Projects/AABC Data Migration Toolkit|Back to AABC Data Migration Toolkit project page]] | ||

| + | * [[Development/Projects|Back to Development projects]] | ||

| + | * [[Main Page|AtoM wiki home]] | ||

| + | |||

| + | |||

| + | [[Category:Development documentation]] | ||

Revision as of 18:57, 30 July 2015

Main Page > Development > Development/Projects > Development/Projects/AABC Data Migration Toolkit > UNBC

Contents

Overview

UNBC Archives is the first participant to be migrated as part of the AABC Data Migration Toolkit project. The original project specification included migrating data from MS SQL Server, MS Access and ICA-AtoM (Release 1.0.9) MySQL databases as well as linking over 5,000 digital asset files (image and video).

Data migration from the MS SQL Server data was performed via the Pentaho Data Integration (PDI) Spoon (pictured) extract, transform, load tool - see Technical Tool Options for other options considered, and reasons for selecting PDI.

Due to the pilot nature of the project, there were a number of unforeseen technical challenges and the project ran over schedule.

Original sources for migration

One (1) MS SQL Server database backup file

- NBCA_backup_201004080030.bak

Four (4) MS Access databases:

- Fawcett.mdb

- Ray Williston Collection.mdb

- Cassiar_Box1_on.mdb

- Cassiar-Box751onwards.mdb

One (1) archive (ZIP) file containing digital objects (image and video files):

- Uploads.zip

Data from one (1) ICA-AtoM Release 1.0.9 instance (set up in late 2010?), including description and digital assets

Data migrated

The final migrated data includes

- The NBCA MS SQL Server database data as detailed in the #NBCA database mappings section below

- The digital files from the Uploads.zip archive

- The ICA-AtoM Release 1.0.9 UNBC website (hosted by Artefactual) including digital assets

Data not migrated

MS Access databases

None of the four MS Access databases provided were migrated, for the following reasons

- the Cassiar fonds files (Cassiar_Box1_on.mdb, Cassiar-Box751onwards.mdb) were never scheduled for migration

- in the course of the project it was determined that the "Ray Williston Collection" data was present in the NBCA (MS SQL Server) database, so migration of the Ray Williston Collection.mdb database was not necessary

- The Fawcett.mdb database will be migrated after development of a AABC_Data_Migration_Toolkit#CSV Import methodology

Accession data

ICA-AtoM Release 1.1 has no provision for storing accession data. The future ICA-AtoM Release 1.2 will include an accession module, and this data will be migrated at a future date.

Accession data is stored in several tables in the NBCA database, and these were not migrated:

- Accession - accession and appraisal data

- Individual - most of the data in this table is specific to appraisers and donors

- AppraiserSpecialty - links appraisers (Individual) to their area of specialty

- Receipt - accession receipts?

Other MS SQL Server tables not migrated

- SpecifiedLocation - locations are specified at the Fonds level, and are very general (e.g. "Photograph room", "Mobile shelving", "On display in library"). UNBC agreed that migrating this data was not necessary.

- Lookup - contained controlled vocabulary maintained in the Lookups table was not applicable in the ICA-AtoM application.

Project deliverables

- Migration of all source data to a single ICA-AtoM Release 1.1 website (hosted by Artefactual)

- Integration of linked digital assets

- This process documentation

Methodology

- Migrate ICA-AtoM Release 1.0.9 data (with attached digital assets) to ICA-AtoM Release 1.1 data schema. See: Upgrading

- Install MS SQL Server 2005 Express and load the NBCA database from backup file provided

- Use the Pentaho Data Integration (PDI) Spoon tool to create a data migration script to migrate the NBCA database to the ICA-AtoM data model

- Attach digital assets from Uploads.zip archive to NBCA descriptions

- Refine the data migration script through several iterations

- Set up an install of ICA-AtoM for UNBC to audit the migrated data - including Release 1.0.9 data, NBCA database data, and all digital assets from both

- UNBC audits migrated data on the auditing site

- Correct any problems with the migration script found in the data audit

- Do the final migration of the data to the live UNBC site (hosted by Artefactual)

Extract

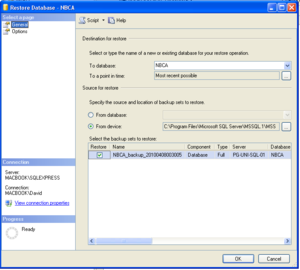

Restore UNBC MSSQL Backup

- Download and install SQL Server 2005 Express Edition with Advanced Services SP3

- Installion options

- Components: Management Studio Express, Full-text search, Connectivity Components, Replication?

- Mixed Authentication Mode (Windows and SQL Server)

- Add user to the SQL admin role

- Copy NBCA_backup_201004080030.bak to C:\Program Files\Microsoft SQL Server\MSSQL.1\MSSQL\Backup\NBCA_backup_201004080030.bak

- Run SQL Server Management Studio express

- Right-click "Databases" > Restore

- To database "NBCA" (or whatever).

- From device (browse to NBCA_backup_201004080030.bak)

- Click checkbox next to backup filename

- OK

Setup MS SQL Server for remote connections

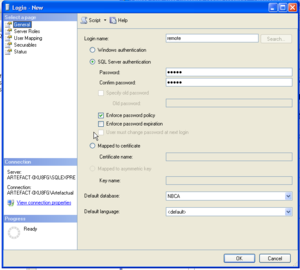

Create new SQL Server login

- In left pane of "Microsoft SQL Server Management Studio Express" click to expand "Security > Logins"

- Right-click either "Login" folder or in Login pane and select "New Login..."

- Enter username

- Select "SQL Server authentication"

- Enter password, and confirm

- Uncheck "Enforce password expiration"

- Default database "NBCA"

- OK

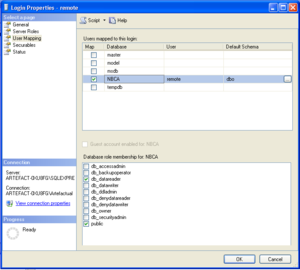

Give read access to new login

- Right-click newly created login, and select "Properties"

- Click "User-Mappings" in left pane

- Click checkbox next to "NBCA" database in top-right pane

- Click "db_datareader" permission in lower-right pane

- Click OK

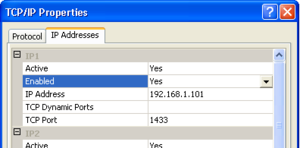

Turn on TCP/IP access

- "Start > Microsoft SQL Server 2005 > Configuration Tools > SQL Server Configuration Manager"

- In left pane, click "SQL Server 2005 Network Configuration > Protocols for SQLEXPRESS"

- Right-click "TCP/IP" in right pane, and click "Properties"

- click "IP Addresses" tab

- For desired TCP/IP connection select "Enabled: Yes", "TCP Dynamic Ports: <blank>", "TCP Port: 1433". OK (You will get a warning that server must be restarted for changes to effect) (See screenshot)

- Right-click "TCP/IP" and click "Enable" (Warning again)

- Click "SQL Server 2005 Services" in left pane

- In right pane, right-click "SQL Server (SQLEXPRESS)" and click "Restart"

Troubleshooting TCP/IP config

In several instances the static port settings above did not work properly with SQL Server 2005. Fixing this issue required modification the following Windows registry keys to set the Port value to 1433.

- HKEY_LOCAL_MACHINE\SOFTWARE\Microsoft\Microsoft SQL Server\<InstanceName>\MSSQLServer\SuperSocketNetLib\Tcp

- HKEY_LOCAL_MACHINE\SOFTWARE\Microsoft\Microsoft SQL Server\<MSSQL.x>\MSSQLServer\SuperSocketNetLib\Tcp\IPAll

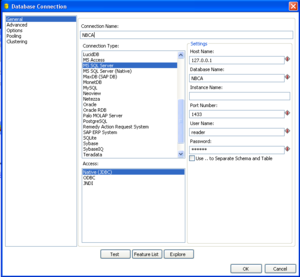

Connecting to MSSQL with PDI Spoon via JDBC

PDI Spoon is a Java GUI for creating PDI Transformation scripts. We will use it to connect to MSSQL with the JDBC connector. Please read the Spoon documentation for general instructions on using the tool.

- Add new "Input > Table input" step

- Double-click the Table input step

- Click the "New" connection button,

- Select the MS SQL Server connector, and fill in the form like the screenshot below

- Click "Test"

- Click OK

Note

NOTE: The "MS SQL Server (Native)" connector did not work in our tests, so the vanilla "MS SQL Server" connector was used

Transform

NBCA database mappings

Table: Fond

| Source column | Dest. column | Notes |

|---|---|---|

| Id | keymap.source_id | keymap table maps source keys to target keys |

| Accession | note_i18n.content | Store as RAD "Alpha-numeric designations" note |

| Title | information_object_i18n.title | |

| DerivedNameOne | none | calculated value based on Title. Not migrated |

| DerivedNameTwo | none | calculated value based on Title. Not migrated |

| BeginCirca | event.start_date | Parse regular dates (e.g. "22 February 1960", "Jul. 1983", "1922") to event.start_date (date type). Copy all (regular and irregular) dates to event_i18n.date (string type) |

| EndCirca | event.end_date | Parse regular dates (e.g. "22 February 1960", "Jul. 1983", "1922") to event.end_date (date type). Copy all (regular and irregular) dates to event_i18n.date (string type) |

| Restrictions | information_object_i18n.access_conditions | RAD label "Restrictions on access" |

| Copyright | none | All rows are blank in source table |

| SourceOfTitle | note_i18n.content | RAD note type: "Source of title proper" |

| TitleAndAccessNotes | none | All rows are blank in source table |

| PhysicalDescription | information_object_i18n.extent_and_medium | |

| Conservation | none | All rows are blank in source table |

| ScopeAndContent | information_object_i18n.scope_and_content | |

| Series | none | Series list will be derived from parent/child relationship |

| PhysicalDescriptionNotes | none | All rows are blank in source table |

| CustodialHistoryPrivate | none | ICA-AtoM doesn't currently have a column for storing "private" data. Migrate with accession data after Release 1.2 |

| CustodialHistoryPublic | information_object_i18n.archival_history | The "archival_history" column is labeled "Custodial history" in the RAD template. |

| CreatorHistory | none | Source column contains a mix of: creator name, dates of existence and immediate source of acquisition. Normalized creator name is imported from UNBC edited csv file "creator.csv" |

| ProvenanceNotes | none | All rows are blank in source table |

| KeyWords | term_i18n.name, actor_i18n.authorized_form_of_name | Exported to csv file. UNBC manually normalized CSV data to name, place or subject "access points" using Google Refine. The modified csv file was then imported using PDI Spoon |

| BioAdminHistory | actor_i18n.history | |

| BioAdminHistNotes | none | All rows are blank in source table |

| LangaugeNotes | none | Only three rows in Fonds table have non-English languages, migrate manually |

| Record | none | One row contains "FOR-17800", migrate manually |

| Arrangement | information_object_i18n.arrangement | |

| FindAids | information_object_i18n.finding_aids | |

| GeneralNotes | notes_i18n.content | General note type |

| ArchivistId | none | Ran out of time to migrate the archivist name to "revision history" |

| ShowOnWeb | status.status_id | false = "Draft" (status_id: 159), true = "published" (status_id: 160) |

| CreatedBy | none | Machine generated data that is not relevant to ICA-AtoM |

| CreatedOn | object.created_at | |

| ModifiedBy | none | Machine generated data that is not relevant to ICA-AtoM |

| ModifiedOn | object.updated_at | |

| Deleted | none | Manually remove 'deleted' rows from Qubit. Can't do this during migration because of data integrity issues, some deleted rows have non-deleted children |

Table: FondSeries

| Source column | Dest. column | Notes |

|---|---|---|

| Id | keymap.source_id | The keymap table maps source keys to target keys |

| Accession | note_i18n.content | Store as RAD "Alpha-numeric designations" note |

| Title | information_object_i18n.title | Trim trailing periods from title in transformation |

| DerivedNameOne | none | Calculated value based on Title. Not migrated |

| DerivedNameTwo | none | Calculated value based on Title. Not migrated |

| FondId | information_object.parent_id | Foreign key self-relation. Use keymap table to lookup parent (Fond) target key |

| BeginCirca | event.start_date | Parse regular dates (e.g. "22 February 1960", "Jul. 1983", "1922") to event.start_date (date type). Copy all (regular and irregular) dates to event_i18n.date (string type) |

| EndCirca | event.end_date | Parse regular dates (e.g. "22 February 1960", "Jul. 1983", "1922") to event.start_date (date type). Copy all (regular and irregular) dates to event_i18n.date (string type) |

| SourceOfTitle | note_i18n.content | RAD note type: "Source of title proper" |

| TitleAndAccessNotes | none | All rows are blank in source table |

| PhysicalDescription | information_object_i18n.extent_and_medium | |

| ScopeAndContent | information_object_i18n.scope_and_content | |

| PhysicalDescriptionNotes | none | All rows are blank in source table |

| BioAdminHistory | none | Field data is often inconsistent with intended use. Can't determine authoritative name for person or organization |

| KeyWords | none | All rows are blank in source table |

| CustodialHistoryPrivate | none | All rows are blank in source table |

| CustodialHistoryPublic | information_object_i18n.archival_history | The "archival_history" column is labeled "Custodial history" in the RAD template. |

| CreatorHistory | none | All rows are blank in source table |

| ProvenanceNotes | none | All rows are blank in source table |

| Record | none | 16 rows in database, all part of "Aleza Lake Research Forest Archival Records fonds". Migrate manually |

| Arrangement | information_object_i18n.arrangement | |

| GeneralNotes | notes_i18n.content | General note type |

| ArchivistId | none | Ran out of time to migrate the archivist name to "revision history" |

| ShowOnWeb | status.status_id | false = "Draft" (status_id: 159), true = "published" (status_id: 160) |

| CreatedBy | none | Machine generated data that is not relevant to ICA-AtoM |

| CreatedOn | object.created_at | |

| ModifiedBy | none | Machine generated data that is not relevant to ICA-AtoM |

| ModifiedOn | object.updated_at | |

| Deleted | none | Manually remove 'deleted' rows from Qubit. Can't do this during migration because of data integrity issues, some deleted rows have non-deleted children |

Table: FondSeriesFile

| Source column | Dest. column | Notes |

|---|---|---|

| Id | keymap.source_id | The keymap table maps source keys to target keys |

| Accession | note_i18n.content | Store as RAD "Alpha-numeric designations" note |

| Title | information_object_i18n.title | Trim trailing periods from title in transformation |

| DerivedNameOne | none | Calculated value based on Title. Not migrated |

| DerivedNameTwo | none | Calculated value based on Title. Not migrated |

| FondSeriesId | information_object.parent_id | Foreign key self-relation. Use keymap table to lookup parent (FondSeries) target key |

| CreationCirca | event.date | Start date may be copied to event.start_date, if the format is recognized |

| SourceOfTitle | note_i18n.content | RAD note type: "Source of title proper" |

| Copyright | none | All rows are blank in source table |

| AccessRestrictions | information_object_i18n.access_conditions | RAD label "Restrictions on access" |

| TitleAndAccessNotes | note_i18n.content | Contains a mix of "Physical condition", "Cartographic scale", Physical location, previous Accession #'s and general notes. Migrated as "General Note" |

| GeneralMaterialDesignation | none | All rows are blank in source table |

| PhysicalDescription | information_object_i18n.extent_and_medium | |

| LocationId | none | All rows are blank in source table |

| ScopeAndContent | information_object_i18n.scope_and_content | |

| Conservation | note_i18n.content | "Conservation" note type |

| PhysicalDescriptionNotes | none | All rows are blank in source table |

| BioAdminHistory | none | Field data is mostly inconsistent with intended use. Can't algorithmically determine authoritative name for person or organization |

| KeyWords | none | All rows are blank in source table |

| CustodialHistoryPrivate | none | ICA-AtoM doesn't currently have a column for storing private data. Migrate with accession data after Release 1.2? |

| CustodialHistoryPublic | none | All rows are blank in source table |

| ProvenanceNotes | none | All rows are blank in source table |

| PhotographerOrArtist | note_i18n.content | Migrated as "General note" |

| Record | none | Appropriate RAD designation is unclear. Ran out of time to migrate (146 rows contain data) |

| Arrangement | none | All rows are blank in source table |

| GeneralNotes | notes_i18n.content | General note type |

| ArchivistId | none | Ran out of time to migrate the archivist name to "revision history" |

| ShowOnWeb | status.status_id | false = "Draft" (status_id: 159), true = "published" (status_id: 160) |

| CreatedBy | none | Machine generated data that is not relevant to ICA-AtoM |

| CreatedOn | object.created_at | |

| ModifiedBy | none | Machine generated data that is not relevant to ICA-AtoM |

| ModifiedOn | object.updated_at | |

| Deleted | none | Manually remove 'deleted' rows from Qubit. Can't do this during migration because of data integrity issues, some deleted rows have non-deleted children |

Table: FondSeriesItem

| Source column | Dest. column | Notes |

|---|---|---|

| Id | keymap.source_id | The keymap table maps source keys to target keys |

| Accession | note_i18n.content | Store as RAD "Alpha-numeric designations" note |

| Title | information_object_i18n.title | Trim trailing periods from title in transformation |

| DerivedNameOne | none | Calculated value based on Title. Not migrated |

| DerivedNameTwo | none | Calculated value based on Title. Not migrated |

| FondSeriesFileId | none | All rows blank in source table |

| FondSeriesId | information_object.parent_id | Foreign key self-relation. Use keymap table to lookup parent (FondSeries) target key |

| CreationCirca | event.date | In addition, start date may be copied to event.start_date, if the format is recognized |

| SourceOfTitle | note_i18n.content | RAD note type: "Source of title proper" |

| Copyright | none | One value for "Bob Bossin’s Home Remedy for Nucelar War" Show. Migrate manually |

| AccessRestrictions | information_object_i18n.access_conditions | RAD label "Restrictions on access" |

| TitleAndAccessNotes | note_i18n.content | Contains a mix of "Source of title proper", "Terms governing use, reproduction and publication", "Attributions and conjectures" (?) and general notes. Migrated to "General notes" |

| GeneralMaterialDesignation | object_term_relation.term_id | Match closest available term in "General material designation" taxonomy |

| PhysicalDescription | information_object_i18n.extent_and_medium | |

| ScopeAndContent | information_object_i18n.scope_and_content | |

| Conservation | note_i18n.content | "Conservation" note type |

| PhysicalDescriptionNotes | none | All rows are blank in source table |

| BioAdminHistory | none | Field data is mostly inconsistent with intended use (mostly "Part of" designation). Can't algorithmically determine authoritative name for person or organization |

| KeyWords | term_i18n.name, actor_i18n.authorized_form_of_name | Exported to csv file. UNBC manually normalized CSV data to name, place or subject "access points" using Google Refine. The modified csv file was then imported using PDI Spoon |

| CustodialHistoryPrivate | none | ICA-AtoM doesn't currently have a column for storing private data. Migrate with accession data after Release 1.2? |

| CustodialHistoryPublic | none | All rows are blank in source table |

| ProvenanceNotes | none | All rows are blank in source table |

| PhotographerOrArtist | note_i18n.content | Migrated as "General note" |

| Record | none | Appropriate RAD designation is unclear. Ran out of time to migrate (3561 rows contain data) |

| Arrangement | none | All rows are blank in source table |

| GeneralNotes | notes_i18n.content | General note type |

| ArchivistId | none | Ran out of time to migrate the archivist name to "revision history" |

| ShowOnWeb | status.status_id | false = "Draft" (status_id: 159), true = "published" (status_id: 160) |

| CreatedBy | none | Machine generated data that is not relevant to ICA-AtoM |

| CreatedOn | object.created_at | |

| ModifiedBy | none | Machine generated data that is not relevant to ICA-AtoM |

| ModifiedOn | object.updated_at | |

| Deleted | none | Manually remove 'deleted' rows from Qubit. Can't do this during migration because of data integrity issues, some deleted rows have non-deleted children |

Table: Attachment

| Source column | Dest. column | Notes |

|---|---|---|

| SourcePath | digitalobject.path | See #Digital asset import below for details |

| Remaining columns in this table were not migrated | ||

Table: FondSeriesItemAttachement

| Source column | Dest. column | Notes |

|---|---|---|

| AttachmentId | digitalobject.information_object_id | See #Digital asset import below for details |

| FileSeriesItemId | information_object.id | See #Digital asset import below for details |

| Remaining columns in this table were not migrated | ||

ICA-AtoM Release 1.0.9 data

Data from the existing http://ica-atom.org/unbc database was migrated to ICA-AtoM Release 1.1 data schema via the standard ICA-AtoM upgrade process.

Other transform sources

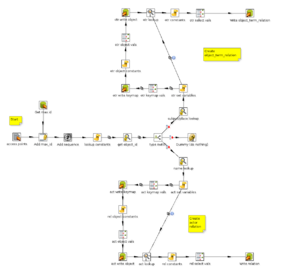

Creator name

The NBCA.Fond table had no standard field for recording the creator of a Fonds, so the CreatorHistory and Keywords columns were used in an ad-hoc manner. Because neither the name format nor the column used was consistent, there was no automated way to extract the name of the Fonds' creator. The solution was to create a CSV export file from the NBCA Fond table and for the UNBC archivists to manually enter a creator name, where the creator was known.

The creator.csv file was created by export of the NBCA Fond table (columns: Id, Title, CreatorHistory and BioAdminHistory) and was then manually edited by the UNBC Archives staff. The edited creator.csv file is loaded into ICA-AtoM by the PDI Spoon Transformation "Creators.ktr".

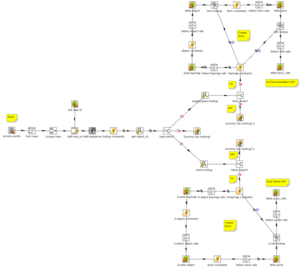

Keywords

In the NBCA Fond and FondSeries source tables, the Keywords column contains a semi-colon separated list of strings. This data is defined via "Access Points" in the ICA-AtoM model. Because ICA-AtoM distinguishes between name, subject and place access points, and there is no automated way to categorize all of the original strings, this required a multi-part process:

- Export a comma-separated file containing all Keywords column data from the NBCA database (only present in Fond and FondSeries tables)

- Run automated normalization and data-cleaning using Google Refine (Artefactual)

- Manual data cleaning via Google Refine (UNBC)

- Import of cleaned and normalized CSV file via PDI Spoon

The complex data structure of ICA-AtoM further complicated the import process (step #4) as can been seen in the AccessPointEntity.ktr and AccessPointRelation.ktr screenshots.

Digital asset import

Digital assets locations were exported from the NBCA MS SQL Server database to a CSV file via PDI Spoon transformation script then the actual digital assets were imported and linked to a description via the digitalobject:load task. The transformation script also mapped the source id (FondSeriesItemAttachement.FondSeriesItemId) to the target key (information_object.id) via the keymap table, and did a string search & replace on the Attachment.SourcePath column to substitute the local path for each digital asset.

Load

Load existing ICA-AtoM install data

Data from the http://ica-atom.org/unbc site can be loaded via mysqldump backup file. This step must be performed before any other migrations because it destroys any existing data in the target database.

Load NBCA data

The PDI Spoon NBCA_Transform.kjb job writes data directly to the target ICA-AtoM MySQL database.

Load digital objects

Digital objects are loaded using the digitalobject:load task using the nbca_digitalobjects.csv file to link assets to archival descriptions.

Post-processing

Several final tasks must be performed on the final ICA-AtoM instance data which require direct access to the ICA-AtoM/symfony framework

- Build nested set - See: Build nested set task

- Generate permalink slugs - See: Generate slugs task

- Build search index