AABC Data Migration Toolkit

Main Page > Development > Development/Projects > Development/Projects/AABC Data Migration Toolkit

Note

This is historical documentation that refers to the migration of data from legacy systems to ICA-AtoM. This page was first created in April 2011, and its content was last updated in April 2012. Its contents were migrated to the new AtoM wiki on July 8, 2015: no changes have been made to the original content, though some formatting may have been changed for greater readability, or to account for differences in styles between the two wikis. Dead external links may also have been removed. Otherwise, the content itself has not been altered.

Contents

- 1 Background and Objectives

- 2 Project Participants

- 3 Technical Tool Options

- 4 Methodology

- 5 Implementation

Background and Objectives

The AABC Data Migration Toolkit project is a collaboration between the Archives Association of British Columbia (AABC), Artefactual Systems, the Anglican Diocese of New Westminster Archives, Simon Fraser University Archives, the University of Victoria, and the University of Northern British Columbia Archives to migrate data from legacy database systems used in the BC archival community to ICA-AtoM. The project will begin November 2010 and be completed by April 2011.

The project will analyze and select appropriate technical tool(s) to use in the migration process as well as develop a generic methodology to assist those doing data migration projects. This 'toolkit' should also be useful to other data migration projects, not just those migrating data into ICA-AtoM.

Project Participants

- National Archival Development Program (funding)

- Archives Association of British Columbia (coordination)

- Artefactual Systems (technical lead & coordination)

- Anglican Church Archives (InMagic migration & funding)

- Simon Fraser University Archives (FileMaker migration & funding)

- University of Victoria Archives (MS-Word migration & funding)

- University of Northern British Columbia Archives (MS-SQLserver migration & funding)

Technical Tool Options

Drupal-based tool(s)

There are a number of Drupal import/export modules available.

The Migrate module developed by Cyrve looks interesting. Cyrve has also published their migration methodology [1] [2].

Presumably we can use Drupal CCK and Views to model almost any data structure. Then we can use export functionality or other internal data migration tools to map it and export it to Qubit data structure?

Also, once we get external data into Drupal we could, theoretically, expose it as RDF data to get it out into other systems:

- http://drupal.org/project/rdf

- http://openspring.net/blog/2009/10/22/produce-and-consume-linked-data-with-drupal

See also: The eXtensible Catalog (XC) Drupal Toolkit

Reasons for elimination of Drupal-based tools

Ultimately any Drupal based tool is going to involve a two step migration process

- Migrate data Drupal schema (via CCK?)

- Migrate data to Qubit schema (via RDF?)

The extra step of migrating data to Drupal is a significant amount of extra work, without any concrete benefits. The difficulty of migrating the data to Drupal is exacerbated by the complex nature of the source and Qubit schemas. Implementing an RDF schema for Drupal and Qubit would have benefits beyond the scope of this project, but requires a significant amount of extra work that is not within the scope of the project. In addition any Drupal modules are, by nature, a extension on the Drupal framework, which will involve compromises that are not required for task specific ETL tools.

Using Drupal as a migration tool also requires installing an configuring Drupal, plus the necessary Drupal modules (CCK, View, Migrate, RDF, etc.); This involves significantly more work then installing a stand-alone ETL desktop application.

Google Refine (formerly Freebase Gridworks)

Reasons for elimination of Google Refine

Google refine only works with flat-files [3] (version 2.0 2010-11-25) so it's not an option for Filemaker, MSSQL, MySQL or other SQL formats, which are part of our requirements.

Open-source ETL tool(s)

Wikipedia list of Extract, transform, load (ETL) tools

It's tough finding any decent (unbiased) information on any ETLs and the open source ones especially. A couple of names cropped up multiple times in my searches:

Pentaho Data Integration (Kettle) - community edition (FOSS)

- screencast: http://www.pentaho.com/products/demos/PDI_overview/PDI_overview.html (skip to ~5m00s for live demo)

- screencast: http://www.talend.com/demo/index.php

It sounds like either tool should be fine for small-scale projects like the ones we are tackling. Both Kettle and Talend OS use a Java GUI and thus run on Linux, Windows and Mac. Blog comparison of Pentaho Kettle and Talend

Talend immediately annoyed me by offering the dreaded "feature comparison matrix" for it's own products and being pretty obtuse about which one is their open-source offering, as well as asking me to register to view their demos. >:-( So, Kettle beats Talend for first try by virtue of having the least annoying website.

I also checked out the screencast for Apatar (which has an EC2 AMI - cool) but I didn't find much information about it other than Wikipedia and their own website. Apatar has a similar GUI to Kettle and Talend. Apatar's puts a lot of emphasis on it's web-service connectors - which include Salesforce, SugarCRM, Amazon S3, but this functionality is not required or beneficial for this project.

Also checked out the Scriptella site. Scriptella is very appealing at first glance because of it's non-corporate web presence and it's claims of simplicity. I was thrown off a bit when I found a post questioning whether Scriptella was dead [4], but they released the 1.0 version in May, 2010 which is somewhat reassuring. However Scriptella does look like a one-man show which is a bit worrying. Scriptella looks like it's command line + config files, so no GUI , which significantly raises the barrier to entry for non-programmers. Random article about Scriptella

Reasons for selection of Pentaho Data Integration (Kettle)

Pentaho Data Integration (PDI) Community Edition (also known as Kettle) is a purpose built ETL tool with a feature set that matches and exceeds our requirements.

The Community Edition of PDI is open-source (LGPL version 2), which meshes well with the open-source philosophy of Qubit. PDI uses a graphical user interface (GUI) running on a Java Virtual Machine (several open-source JVMs are available) which allows the application to run, without functional differences, on Mac, Linux or Windows platforms.

Installing PDI is straight-forward, though it does require installing a suitable JVM as well. This compares favorably with having to install a Drupal-based migration toolset.

There is a steep learning curve for using PDI, especially with regard to the concurrent (multi-thread) and non-sequential property of the transformations. However, PDI's documentation is good, and includes several video and text tutorials, and the PDI community forum is quite active. The migrations that we develop for our pilot organizations and our own documentation should also help ease the learning process.

Comma Seperated Values (CSV) import

Reasons for selection of CSV import as preferred migration path

needs update (note: reserve Pentaho as option for complex migrations but not likely a tool that will be easily implemented by most archivists)

Methodology

"Real life ETL Cycle" (from Wikipedia [5], 2010-12-02)

- Cycle initiation

- Build reference data

- Extract (from sources)

- Validate

- Transform (clean, apply business rules, check for data integrity, create aggregates or disaggregates)

- Stage (load into staging tables, if used)

- Audit reports (for example, on compliance with business rules. Also, in case of failure, helps to diagnose/repair)

- Publish (to target tables)

- Archive

- Clean up

1. Migration using Pentaho

Extract

Source Data Formats:

- MS SQL Server: UNBC data migration

- Filemaker Pro 10: SFU data migration

Transform

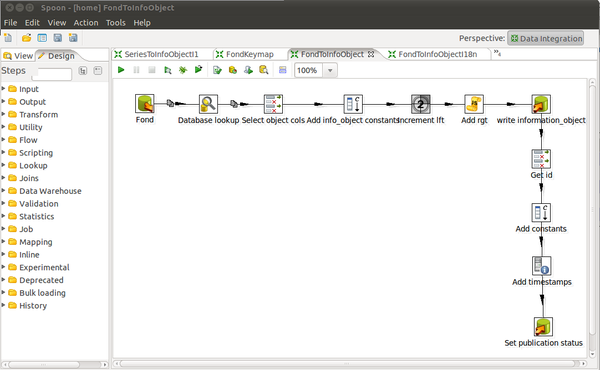

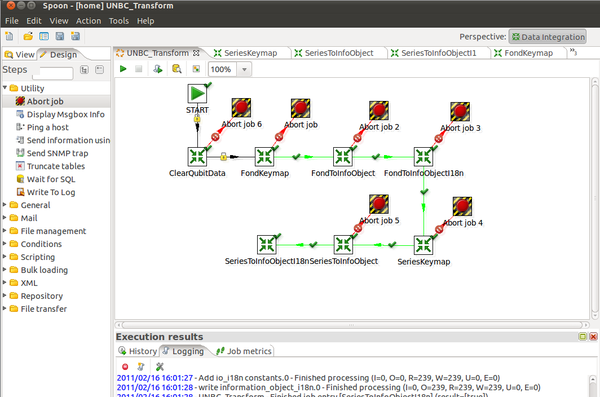

Pentaho Data Integration (PDI) includes a GUI interface for designing and running transformations named "spoon". The screenshots below show the spoon interface.

PDI organizes the individual "Steps" for transforming and mapping data into "Transformations". Data flows between Steps through "Hops" (the arrows) in an non-sequential and simultaneous fashion. This simultaneous execution of steps can be non-intuitive so the user must be careful not to assume what data will already be migrated (e.g. for foreign key relations).

Several "Transformations" can be further grouped in to "Jobs". Jobs run as series of Transformations sequentially (with error handling, state checking, etc.)

Load

There are several possible options for loading the transformed data into Qubit, each with pros and cons.

Write directly to MySQL

My initial instinct was to use the ETL tool to write directly to MySQL. This approach avoids the pain points we've had in the past with data load/import via the ORM: bad performance, large memory requirements and vexing segfaults.

However, This approach has proved difficult and time-consuming due to the highly relational nature of Qubit. To create one archival description record we must write to the following tables:

- information_object

- information_object_i18n

- note

- note_i18n

- object

- object_term_relation

- property

- property_i18n

- relation

- slug

- status

- term

- term_i18n

- ... more?

Many of these tables (e.g. property, note) require writing many rows per archival description.

In addition we've embedded the equivalent of SQL "triggers" in the Qubit ORM in an attempt to be database agnostic. Examples of this are the nested set logic, and slug name sanitization. Having a separate implementation of this logic in our ETL tool will make the transform fragile and very difficult to maintain.

- Thought: Can we run a php script after import to leverage the ORM and fire these "triggers"?

Import via EAD

We have experience with EAD import into ICA-AtoM so we are clear about it's limitations - high resource requirements (low performance and scalability), mapping ambiguities and an enforcement of one fonds/collection per EAD-XML file. However, the very fact that the problem space is well defined and that solutions will benefit the application as a whole (such as round-tripping) make EAD appealing.

Pro

- We already have EAD import done (with a few outstanding issues, see below)

- Don't have to build table hierarchy (e.g. object, actor, repository, slug) manually

- Data-model integrity and logic enforced by embedded ORM logic (don't have to replicate in transform step)

- Portable to any system that will import EAD

Con

- EAD format is a very detailed and finicky, and therefore time-consuming to implement

- Data in the Qubit schema that we can not represent in EAD? (maybe we can "bend" EAD to capture this data?)

- EAD import has several outstanding issues [6]

- Slow load, large memory requirements, segfaults?

- EAD enforces one top-level description per file, which makes bulk load very work-intensive (need to import many files)

Some initial research with the Pentaho Kettle ETL makes it clear that the Kettle XML output step will not support a highly structured XML format like EAD. This leaves us with several possible options:

- Write a simple XML file from Kettle, then use XLST to convert this intermediary file to EAD-XML

- Use e4x and Kettle's javascript capabilities to write EAD directly (e4x example)

- Write a simple XML file from Kettle then use another tool (Google refine?) to transform to EAD-XML

Automated http input

Another possibility is loading data using an HTTP POST to the web front-end, via CURL or another tool. Like the EAD import, this takes advantage of current data model logic embedded in Qubit ORM. This method also has the advantage over EAD import that we don't have to worry about mapping data from the source to EAD and then to Qubit, so we don't have to worry about what data EAD may not capture gracefully.

Automated form input may be especially useful for the digital object load, as we are currently doing in Archivematica. The big issues we've seen with the Archivematica digital object scripting is fragility of script due to UI changes in Qubit, and finding the URI for the related information object (how do we get the right slug?).

Matching URIs is actually a huge problem with automated form input in general - for example how do we easily find the URI for a related name access point to select the correct value in the "name access point" drop-down? Performance is also a serious concern here, because the page load times for creating/editing a single description can be greater than 5 seconds.

Post-load scripts

Several scripts must be run after data load to add secondary data that is essential for the Qubit application, but is not easily generated during #Transform.

Build nested set task

- Syntax

php symfony propel:build-nested-set

- Description

- Qubit uses the Nested set model for arranging hierarchical data. The 'build-nested-set' task generates the required column values (lft, rgt) for the loaded data, based on the parent_id self-relationship. Creating the nested set script was quite complex, as it requires recursively descending down the hierarchy tree to derive the proper lft and rgt values. This script could be useful outside of the context of migrations for re-building the nested set after corruption (e.g. due to direct editing of the database) or data loss.

Generate slugs task

- Syntax

php symfony propel:generate-slugs

- Description

- Qubit generates Slugs for creating permalinks to application resources. The 'generate-slugs' task generates these slugs for any resources that do not already have a slug. Because this script is non-destructive it can be run any time.

Digital object load task

- Syntax

php symfony digitalobject:load filename.csv

- Description

- Takes a CSV file as input which contains two 'columns': "filename" and "information_object_id"; the script will fail if these column headers are not present in the first row of the CSV file. The filename column contains the full (current) path to the digital asset (file). The information_object_id identifies the linked information object. Qubit does not allow more than one digital object per information object (with the exception of derivatives), and each digital object must have a corresponding information object to describe it, so this one-to-one relationship must be respected in the CSV import file.

2. Migration using CSV import

Extract

Most database applications have an export function to save data to a flat-file format. CSV and tab-delimited exports are especially ubiquitous, which is one of the reasons for using the CSV format.

Filemaker Pro 10

Pilot participants: SFU data migration

Filemaker has an advanced export function that allows export to many flat-file formats, including CSV, tab-delimited, XML and Excel (and others). In addition Filemaker allows the user to select exactly which fields are exported.

We used the "Excel" format for export as it includes column headers, which is not true of the CSV or tab-delimited formats.

Inmagic

Pilot participants: Anglican Archives data migration

Inmagic includes nice export functionality that allows specifying custom delimiters for field, line and multiple values within a field as well as string delimiters (e.g. quotes). Inmagic also allows exporting a found set of records, or the entire database and can include or exclude column headers.

We exported data using the standard CSV format with column headers.

MS Word

Pilot participants: University of Victoria data migration

TODO

MS Excel

MS Excel will save directly to a CSV or tab-delimited format.

Validate

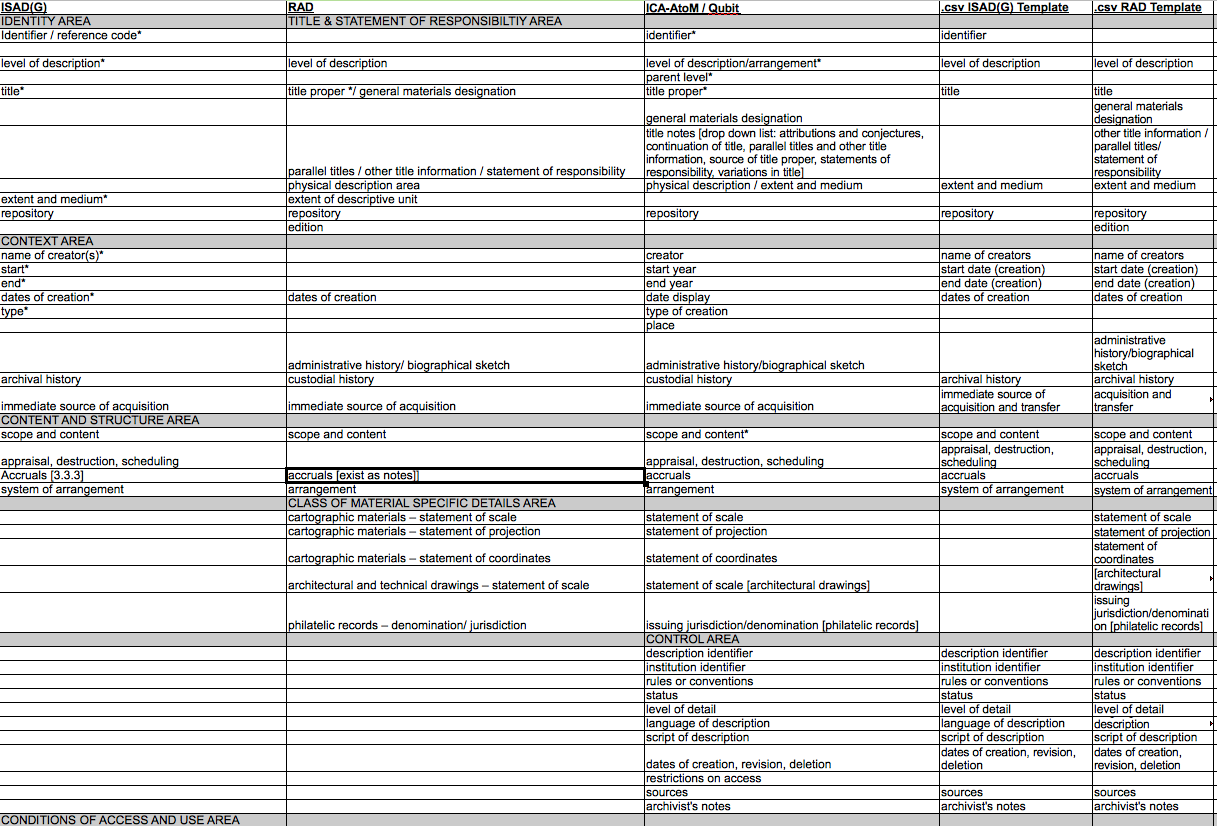

Two comma-separated value (CSV) file templates are provided, one for ISAD(G) and one for RAD. These files can be edited with any common spreadsheet application (Microsoft Excel, LibreOffice Calc, etc.) but must be saved in CSV format to be imported by ICA-AtoM.

It became clear that a Crosswalk between ISAD(G), RAD, ICA-AtoM, and our .csv template was needed to support Archivists beginning the process of cleaning-up & transferring their data into ICA-AtoM.

Here is a screen capture of the spreadsheet:

Download the whole spreadsheet for your own use:File:Crosswalk.ods

Transform

- Export data from legacy database as a comma separated values (CSV) text file format. CSV is used to store tabular data and can be accessed using a spreadsheet or database application

- Using the appropriate CSV template, compare legacy database CSV file with either .csv RAD template or .csv ISAD(G) template

- The templates are based on descriptive standards RAD and ISAD(G)

- Many legacy databases are populated with non-standardized data fields. When comparing column headers, the .csv template provides the standardized data fields and the legacy database CSV file may need to be rectified to match

- Rectify column headers in legacy database CSV file

- If necessary, merge data in more than one column to match individual column headers in specific .csv template

</br>

- Review CSV document for any anomalies in the data cells (e.g., misspelled names, inconsistent date formats, typos, incomplete records etc...)

- If data is inaccurate or inconsistent, Google Refine is an excellent tool for data clean-up